Trolltech's Qt 4.3.0 really begins to dazzle

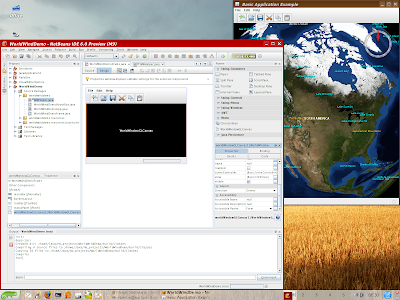

It's not hard to impress me with new visual software. I love eye candy, the flashier the better. So it should come as no surprise that I'm awfully impressed with the latest version of the Qt framework, 4.3.0. I installed it on three systems for a quick and dirty evaluation: algol (my XP Core Duo notebook), europa (my OpenSuse 10.2 Athlon XP system), and my daughter's Toshiba Satellite A135 notebook running Vista Home Premium. I've got screen shots from XP and Suse, but I didn't bother to do a Vista capture. I'll explain why later.

Installing Qt Windows Open Source Edition is dead simple. Download the installation binary and run it. It will install the complete set of Qt tools and examples. If you don't already have MinGW, it will also install a copy so you can compile Qt applications. Note that the Windows version of the Qt framework does not have to be compiled; everything is pre-built and ready to use. The screen shot below is the appli...